Table of contents

No headings in the article.

Time Complexity is one of the most important and key topic in Data Structure and Algorithm.

Either you are seating for an interview or solving an algorithmic problem it is expected to know and understand the time complexity of the algorithm

It's true that a problem can be solved in multiple ways so it become very important to learn and understand which data structure and algorithms to be used to solve a particular problem.

Lets start with some basic definition

What is Data Structure?

Data Structure is a way of organizing the data so that it can be used effectively. Example of data structure Array,Linked List,Queue,Stack, Map...

What is an algorithms?

Algorithms is a set of rules or instructions that defines how a work is executed in order to get the desired output.

Examples of Algorithms- Search Algorithm,Sorting Algorithms,Traversal Algorithms etc...

There are different search algorithms like Linear Search,Binary Search Algorithm. Before implementing an algorithm we have to make some decision based on time the code takes to execute and the space it takes. We have to choose the best algorithm which takes least time for search, and one of the factors to decide an algorithm is Time Complexity of an Algorithm.

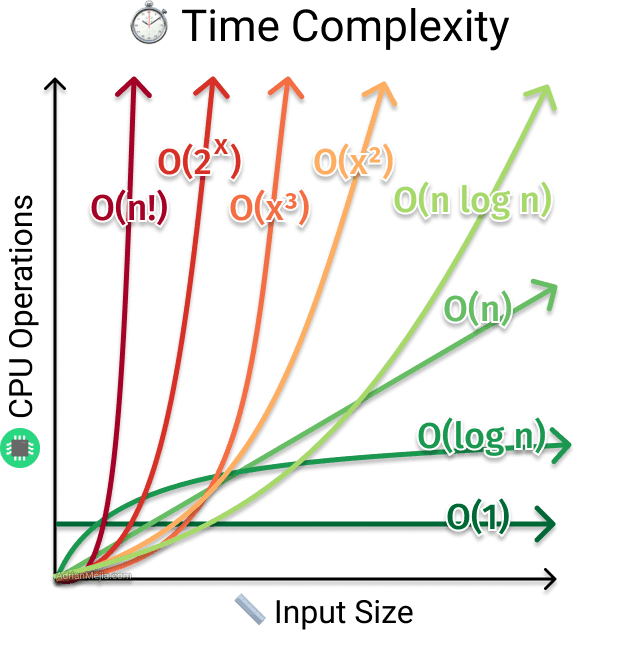

Time Complexity

Time Complexity is the measure of number of operations an algorithm is performing to complete its task Lower the number of operations to complete a task more the efficient an algorithm is.

It is measured in terms of length of input 'n'.

For example

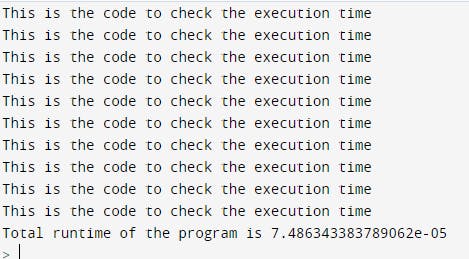

import time

# store starting time

begin = time.time()

# program body starts

for i in range(10): # Number of operation 10

print("This is the code to check the execution time")

# program body ends

# store end time

end = time.time()

# total time taken

print(f"Total runtime of the program is {end - begin}")

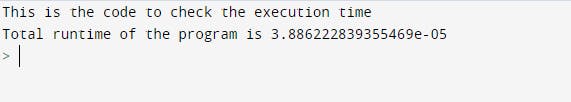

import time

# store starting time

begin = time.time()

# program body starts

# Number of operation 1

print("This is the code to check the execution time")

# program body ends

# store end time

end = time.time()

# total time taken

print(f"Total runtime of the program is {end - begin}")

As you can see in the first example there are 10 operations while in the second example there is only 1 operation. Here the time taken for 10 operations is more than 1 operation

The time required by an algorithm falls under three types −

Best Case − Minimum time required for program execution.

Average Case − Average time required for program execution.

Worst Case − Maximum time required for program execution.

To calculate the time complexity of algorithm we do asymptomatic analysis of algorithm. Using it we can calculate all different type of scenario mentioned above.

This analysis is based on input length . If there is no input given it concludes that it will work in constant time.The analysis assumes all other factors as constant. The other factors can be server load, operating system all these factors are considered constant for the analysis.

Asymptomatic Notation

Below 3 are commonly used to calculate the running time complexity of an algorithm.

Ο Notation-->Big Oh Notation It measures the longest time or the worst time complexity an algorithm can take to run

Ω Notation-->Omega Notation It measures the best amount of time or the best time complexity an algorithm can take to run.

θ Notation-->Theta Notation Its a formal way to express both lower bound and upper bound of an algorithm.

Lets understand the time complexity in little easier way.

Assume you have 5 siblings and your mother give 1 apple to any one of your sibling.Now your mother want that apple so that she can give it to the guests.

O(1): She can go and take the apple from child who she gave it.That means irrespective of number of children the time to get the apple will remain same

Now suppose the mother don't remember whom she has given it.

o(n^2) :She goes and ask the first one if he has the apple, also she ask him about the other 4 children if they have the apple. She does the same with every child.

O(n):She goes and ask each child if they have the apple one by one

O(logn): She divides the children into two group and ask if the apple is in left group or the right group. She repeat this process until she find the child who has the apple.In each operation she is dividing the children into half.

Following is a list of some common asymptotic notations −

Constant --> Ο(1)

Logarithmic --> Ο(log n)

Linear--> Ο(n)

QuasiLinear --> Ο(n log n)

Quadratic --> Ο(n^2)

Cubic --> Ο(n^3)

Polynomial--> n^Ο(1)

Exponential--> 2^Ο(n)